AI 3D Asset Generation as a Method for Increasing Agency Efficiency

AI image generation has been taking the tech world by storm lately. Systems like Midjourney, Stable Diffusion, DALL-E 2, and others are becoming ubiquitous almost as fast as new ones are released. A brand-new set of systems made available last week prompted us to begin internal AI R&D. The age of AI-generated assets is here, and it's up to those of us working deep within the realm of innovation to figure out how to use it.

As part of our internal R&D, I focused on generating usable 3D assets, materials, and textures with available, mostly open-source systems, as a way to increase efficiency and bring AI-integrated workflows to the Design department. These AI-generated 3D assets can be used in many ways: reducing the time it takes to find or create materials for existing assets; custom-texturing a client asset quickly, in a way that allows speedy adjustment based on client feedback; creating game assets for virtual spaces or virtual production; and, finally, creating custom AI-generated furniture, set pieces, and other objects that can be fabricated in the real world.

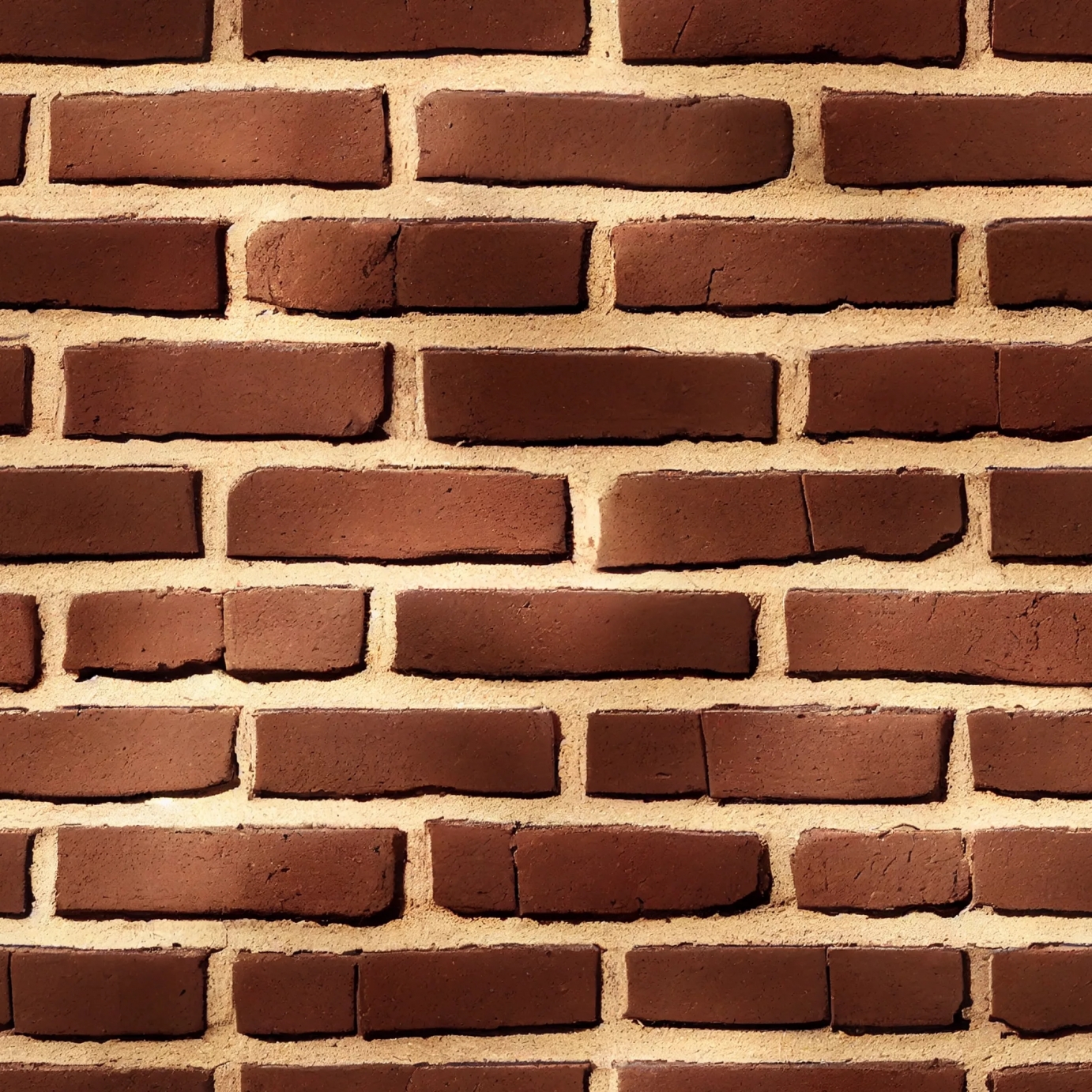

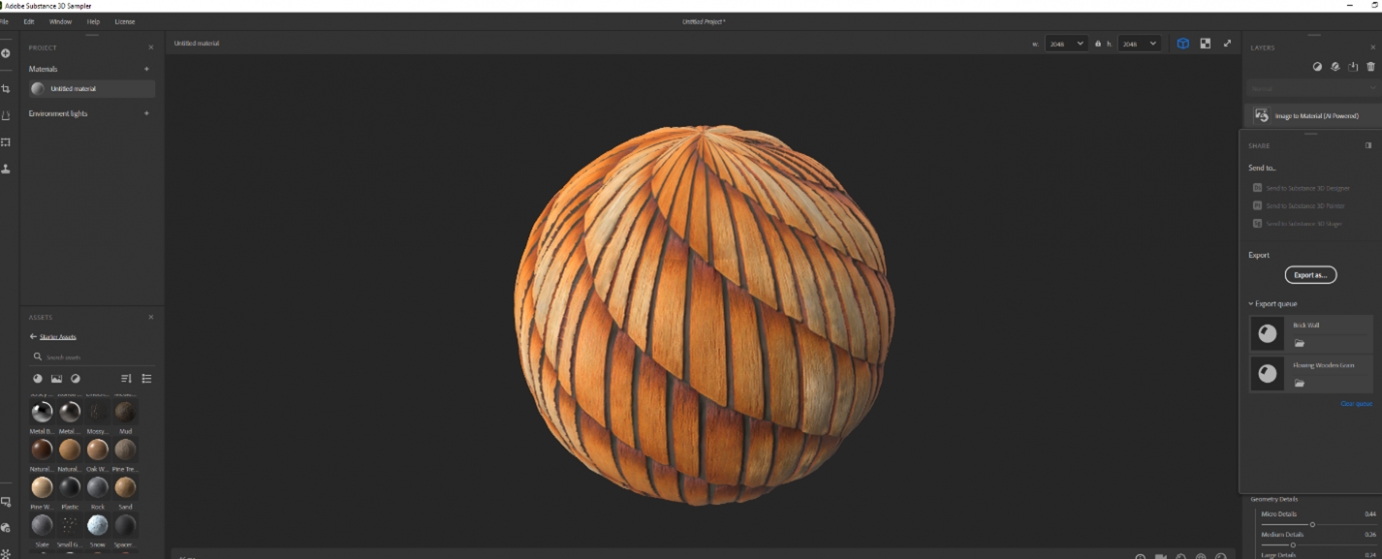

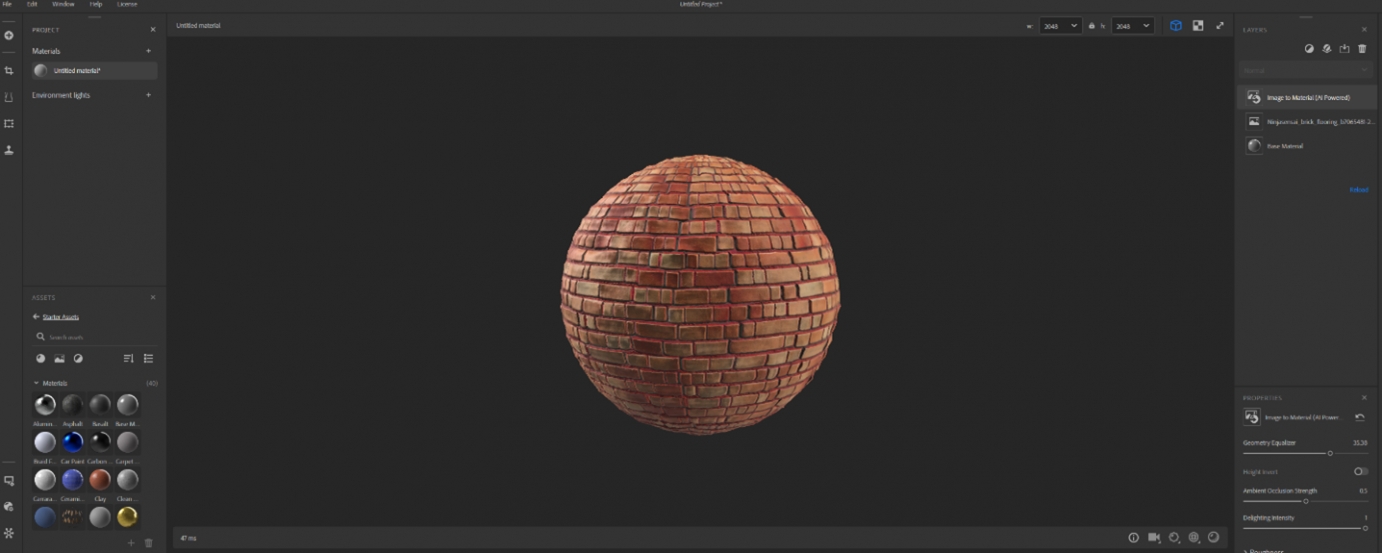

Creating Custom AI-Generated Materials:

By combining Substance 3D Sampler with AI image generators such as Stable Diffusion or Midjourney, it is possible to create 3D materials quickly. These materials can then be applied easily to existing assets, environments, and more.

Start by generating an image with either a text-to-image generator or a system that works from an input image. Once you have a workable image (usually generated with the tiling setting enabled, so that the texture repeats without visible seams), create your base material, such as concrete or brick, from the drag-and-drop menu in Substance 3D Sampler. Then simply drag the AI-generated image onto that base material and adjust the tiling settings until you have a workable textured material!
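The tiling setting is meant to produce an image whose left/right and top/bottom edges line up, so it repeats cleanly once Sampler tiles it. Before importing, a quick sanity check is the classic half-offset test (shift the image by half its size so the original edges meet in the middle) plus a tiled preview. The NumPy sketch below is illustrative only: the function names and the seam heuristic are assumptions, not part of Sampler, Stable Diffusion, or Midjourney, and it assumes the texture is already loaded as an array (for example with Pillow).

```python
import numpy as np

def half_offset(texture: np.ndarray) -> np.ndarray:
    """Shift by half the height and width so the wrap-around seams meet in the centre."""
    h, w = texture.shape[:2]
    return np.roll(texture, shift=(h // 2, w // 2), axis=(0, 1))

def seam_score(texture: np.ndarray) -> float:
    """Rough heuristic: mean absolute difference across the wrap-around edges.

    Lower is better; a tileable texture should change about as little across
    its edges as it does between neighbouring pixels.
    """
    t = texture.astype(np.float64)
    left_right = np.abs(t[:, 0] - t[:, -1]).mean()
    top_bottom = np.abs(t[0, :] - t[-1, :]).mean()
    return float((left_right + top_bottom) / 2)

def tile_preview(texture: np.ndarray, repeats: int = 3) -> np.ndarray:
    """Repeat the texture in a repeats x repeats grid (colour channels untouched)."""
    reps = (repeats, repeats) + (1,) * (texture.ndim - 2)
    return np.tile(texture, reps)

# Usage with synthetic stand-ins for a generated texture:
x = np.linspace(0, 2 * np.pi, 64, endpoint=False)
tileable = np.outer(np.sin(x), np.cos(x))            # periodic in both directions
ramp = np.outer(np.linspace(0, 1, 64), np.ones(64))  # dark top, bright bottom: hard seam
centred = half_offset(tileable)                      # any seam now runs through the middle
print(seam_score(tileable) < seam_score(ramp))       # True
print(tile_preview(tileable).shape)                  # (192, 192)
```

In practice you would eyeball `centred` and the tiled preview rather than trust a single number; the score just flags images worth regenerating.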

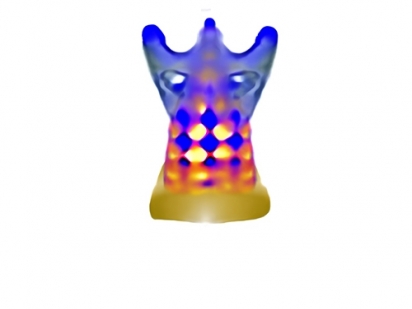

Creating AI-Generated 3D Models from Direct Text:

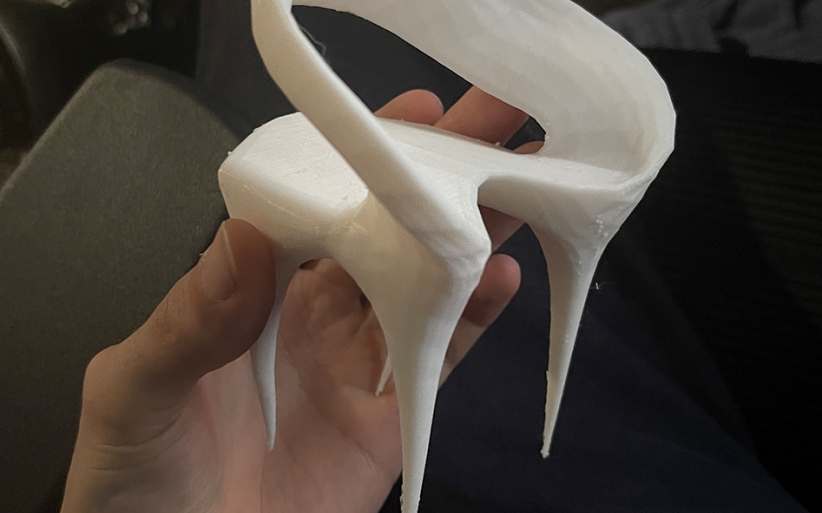

A brand-new system, released on the 25th of September (about two weeks ago), is Dreamfield3D: an open-source system that lets users type a sentence and generates a 3D model, complete with a mesh, of what was written. During my R&D, I focused on generating usable 3D chess pieces, something fun and immediately usable. I picked chess pieces because chess is a ubiquitous game and because, from my cursory research, there had never been an AI-generated chess set, and I figured it was about time!

As of today, Dreamfield3D's most accessible entry point is the Google Colab notebook included in the project's GitHub repository. The notebook comes pre-configured for generating assets and requires little technical know-how to get up and running: simply follow the included instructions, and be prepared to be patient. Under the hood, Dreamfield3D builds on Google's Dream Fields approach, which optimizes a neural radiance field (NeRF) by rendering the object from hundreds of camera angles and scoring each render against the text prompt with CLIP, gradually refining the shape over many iterations. The finished field can be rendered as a 360° video, and the mesh itself is extracted from the field's density using the Marching Cubes algorithm. While the system is currently very slow, the results speak for themselves: the meshes are 3D printable with only minor adjustments and can be fabricated either on a CNC machine with flip-cut capabilities or on a 3D printer (with lots of supports)! Because the system is so new, it is only a matter of time before it becomes more accessible, able to run locally, and faster.
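For readers curious about the meshing step: Marching Cubes works on a 3D grid of values (such as a density field) rather than on images. Each grid cell is classified by which of its 8 corners lie inside the surface, giving an 8-bit case index from 0 to 255; cells with index 0 (all outside) or 255 (all inside) contain no surface, and every other case is looked up in a 256-entry triangle table to emit triangles. The NumPy sketch below shows only that classification step, using a hypothetical sphere as a stand-in for a learned density; the triangle table is omitted for length, and in practice a library routine such as scikit-image's `measure.marching_cubes` performs the full algorithm.

```python
import numpy as np

# Conventional corner ordering for a unit cell (offsets along x, y, z).
CORNERS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
           (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]

def sphere_density(n: int = 32, radius: float = 0.6) -> np.ndarray:
    """Hypothetical stand-in for a learned density field: a solid sphere on an n^3 grid."""
    axis = np.linspace(-1.0, 1.0, n)
    x, y, z = np.meshgrid(axis, axis, axis, indexing="ij")
    return radius - np.sqrt(x**2 + y**2 + z**2)  # > 0 inside, < 0 outside

def cube_case_indices(density: np.ndarray, level: float = 0.0) -> np.ndarray:
    """8-bit Marching Cubes case index per cell: bit k is set if corner k is inside."""
    inside = (density > level).astype(np.uint8)
    nx, ny, nz = (s - 1 for s in density.shape)  # n grid points give n - 1 cells
    case = np.zeros((nx, ny, nz), dtype=np.uint8)
    for bit, (dx, dy, dz) in enumerate(CORNERS):
        case |= inside[dx:dx + nx, dy:dy + ny, dz:dz + nz] << np.uint8(bit)
    return case

case = cube_case_indices(sphere_density())
# Cases 0 and 255 hold no surface; every other cell would receive triangles
# from the 256-entry lookup table, which this sketch leaves out.
surface_cells = (case != 0) & (case != 255)
print(case.shape, int(surface_cells.sum()))
```

A finer grid gives a smoother mesh at the cost of more cells to evaluate, which is one reason mesh extraction adds to the processing time.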

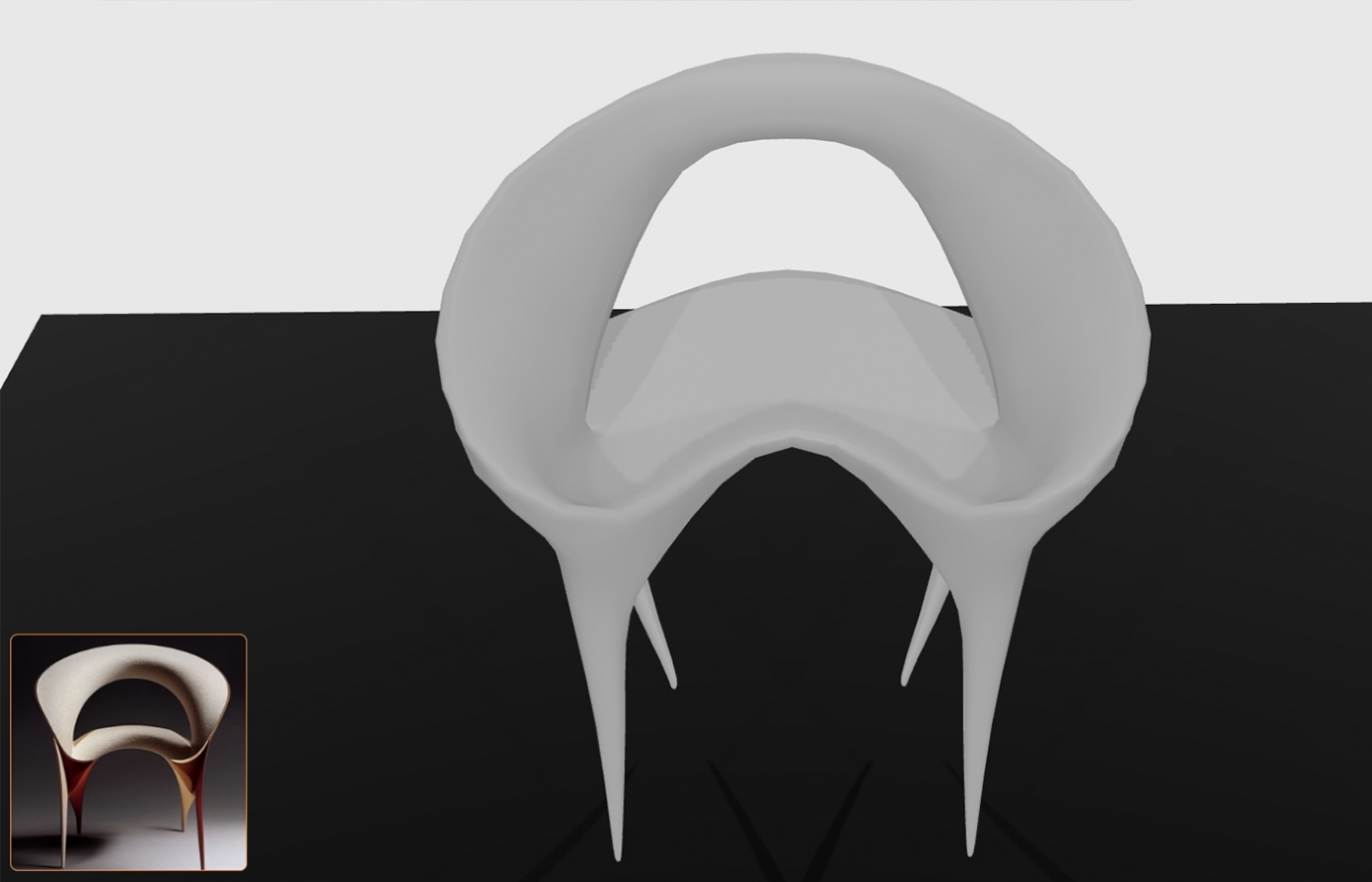

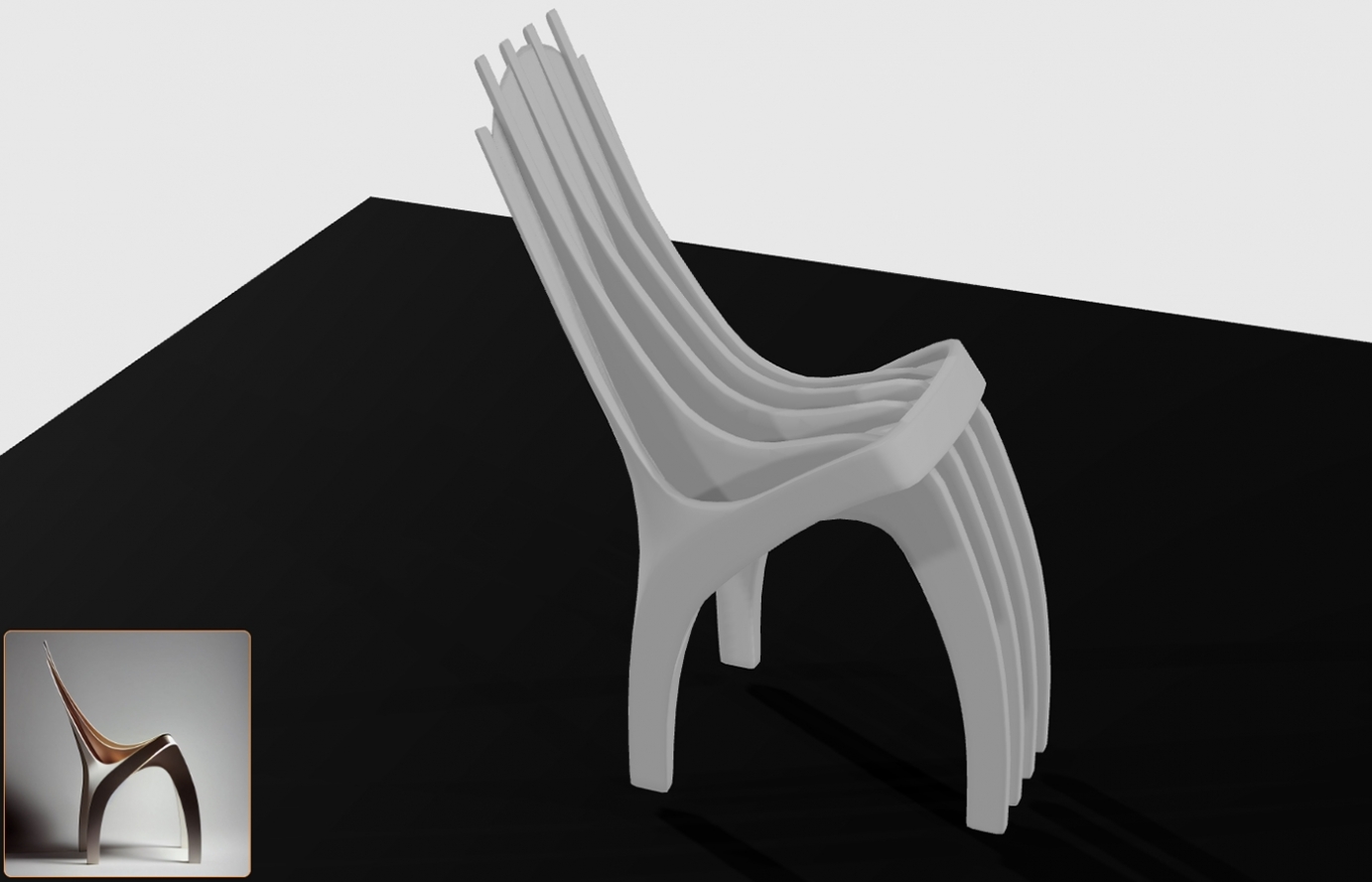

AI-Generated Furniture Using a Single Image Input:

Outside of company time, I've been working on a system for generating furniture from single AI-generated images. This external R&D has focused on finding the best and fastest method for producing AI-designed furniture: pieces that can be fabricated with minimal user tweaks and that are both aesthetically pleasing AND one of a kind.

The least accessible of these AI 3D asset generation techniques relies on Kaedim, a paid, closed-source system. Kaedim is one of the world's first AI asset generators: its AI can look at a single image and infer its depth, along with additional information, to create a relatively high-resolution 3D mesh.

I used this system with AI-generated images so that the final furniture design would be entirely AI-generated, with little to no human input beyond curation. To do this, I used Midjourney to generate an assortment of chair images, curated the best of them, and fed them into Kaedim's backend system. For each chair I uploaded a single image, and within 20 to 30 minutes I received a notification that the system had finished processing my model.

The results are consistently usable: they can be 3D printed, converted into fabrication drawings, or dragged straight into a game engine or virtual production engine such as Unreal Engine or Unity. This immediate interoperability is one of the fundamental benefits of using AI in this way. The assets are delivered in whatever format you need (.fbx, .obj, etc.), which allows for further tweaking and customization.
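Part of what makes a format like .obj so easy to move between tools is that it is plain text: one `v x y z` line per vertex and one `f i j k` line per face, with 1-based vertex indices. The sketch below writes a small mesh in that format, reads it back, and runs a common pre-print sanity check: in a closed (watertight) mesh, every edge is shared by exactly two faces. The helper names and the tetrahedron are illustrative assumptions; real exports also carry normals, UVs, and materials, which this ignores, and dedicated slicer or mesh-repair tools do far more thorough checks.

```python
from collections import Counter

Vertex = tuple[float, float, float]
Face = tuple[int, ...]

def to_obj(vertices: list[Vertex], faces: list[Face]) -> str:
    """Serialize a mesh as minimal Wavefront OBJ text (faces use 1-based indices)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"

def from_obj(text: str) -> tuple[list[Vertex], list[Face]]:
    """Parse 'v' and 'f' lines; for 'f 1/2/3' entries only the vertex index is kept.

    Negative (relative) indices and other record types are not handled.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces

def is_watertight(faces: list[Face]) -> bool:
    """True if every undirected edge is used by exactly two faces."""
    edges = Counter()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):  # consecutive vertex pairs, wrapping
            edges[frozenset((a, b))] += 1
    return all(count == 2 for count in edges.values())

# Usage: a tetrahedron survives the round trip and is closed.
tetra_v = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
tetra_f = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
v, f = from_obj(to_obj(tetra_v, tetra_f))
print(len(v), len(f), is_watertight(f))  # 4 4 True
print(is_watertight(f[:-1]))             # False: a missing face leaves a hole
```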